
Ever wondered how apps like Facebook, Twitter, Zomato, and Hotstar work behind the scenes? These apps run smoothly even when millions of users are using them concurrently.

System design is the process of defining the architecture, interfaces, and data for a system based on a business requirement.

A good system design requires you to think about everything in the infrastructure: the hardware and software, the cloud, and all the way down to the data and how it’s stored.

The goal of this system design primer series is to explain the basics of system design so that you don’t feel left out in engineering discussions.

So, before we dive deep into the different topics of design fundamentals, let’s learn about the key characteristics of a good system. Design fundamentals are the key concepts you need to know before you start to build efficient, robust, and scalable software.

Key characteristics of a system

1. Latency

Latency is the amount of time it takes for data to travel from one point on a network to another. It is always measured in units of time (e.g. seconds, milliseconds, or nanoseconds).

To put it in simple terms, suppose you make an API request from a client at 12:00:00 am and receive the response at 12:00:01 am. You can then say the latency of the API is 1 second (1000 ms).
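To make this concrete, here is a minimal sketch of measuring latency in Python. The `fake_api_call` function is a made-up stand-in for a real API request (it just sleeps for about 50 ms), so treat the numbers as illustrative only.

```python
import time

def measure_latency(operation):
    """Call `operation` once and return (result, latency in seconds)."""
    start = time.perf_counter()   # the "request time"
    result = operation()
    end = time.perf_counter()     # the "response time"
    return result, end - start

def fake_api_call():
    # Hypothetical stand-in for a real API request: waits ~50 ms, then replies.
    time.sleep(0.05)
    return "ok"

result, latency = measure_latency(fake_api_call)
print(f"latency: {latency * 1000:.1f} ms")
```

In a real system you would usually measure many requests and look at the average and the worst cases, not a single call.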

💡 Latency ⇒ response time - request time = 1 sec

2. Availability

Availability refers to the percentage of time the system or infrastructure remains operational. In other words, availability is a measure of how resilient the system is to failures.
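As a rough illustration, the percentage can be computed from uptime and downtime, and an availability target can be turned into a yearly downtime budget. The function names and the sample numbers below are assumptions made for this example, not figures from any real provider.

```python
def availability_percent(uptime_seconds, downtime_seconds):
    """Share of total time the system was operational, as a percentage."""
    total = uptime_seconds + downtime_seconds
    return 100.0 * uptime_seconds / total

def allowed_downtime_minutes_per_year(availability_pct):
    """Downtime budget per (365-day) year for a given availability target."""
    minutes_per_year = 365 * 24 * 60
    return minutes_per_year * (1 - availability_pct / 100.0)

# Hypothetical month: 30 days of operation with one hour of downtime.
month_seconds = 30 * 24 * 3600
print(f"{availability_percent(month_seconds - 3600, 3600):.2f}%")   # 99.86%

# A 99.9% target leaves roughly 525.6 minutes of downtime per year.
print(f"{allowed_downtime_minutes_per_year(99.9):.1f} minutes")     # 525.6 minutes
```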

Companies like AWS, Google, Facebook, Instagram, and Twitter have very high availability, so we don’t hear news of downtime very often. They also maintain separate websites that show the availability status of their systems. Examples: Razorpay Status Page, Google Cloud Status.

In the coming blogs of this series, we will learn how to design highly available systems and minimize points of failure.

Helpful links for designing high availability solutions

3. Throughput

Throughput is defined as the total number of items processed per unit of time (e.g. per second). We generally say something like “our X API has a throughput of 10k/sec”, which means the X API can handle 10k HTTP requests per second.

While designing software, the goal should be to achieve high throughput with low latency.
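Here is a small sketch of how throughput could be measured. The `fake_handler` function is a hypothetical placeholder for real request handling, and the measured rate will differ from machine to machine.

```python
import time

def throughput(items_processed, elapsed_seconds):
    """Items processed per second."""
    return items_processed / elapsed_seconds

def measure_throughput(handler, requests):
    """Run `handler` on every request and return requests handled per second."""
    start = time.perf_counter()
    for request in requests:
        handler(request)
    elapsed = time.perf_counter() - start
    return throughput(len(requests), elapsed)

def fake_handler(request):
    # Hypothetical handler: a little CPU work standing in for real processing.
    return sum(range(1000))

# 10,000 requests handled in one second is a throughput of 10k/sec.
print(throughput(10_000, 1.0))  # 10000.0

rate = measure_throughput(fake_handler, list(range(5_000)))
print(f"measured: {rate:.0f} requests/sec")
```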

4. Concurrency

Technology helps us do things at scale. With concurrency, you can make sure you are able to deliver your product to millions of users at any point in time. Concurrency simply means multiple operations are happening at the same time.

Examples of concurrency:

- A website must handle multiple simultaneous users.

- Multiple applications running on a single computer.

When designing a system, you should know how many concurrent users it can handle with a given set of resources.
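The first example can be sketched with a thread pool. This is only an illustration: `handle_user` is a made-up handler that sleeps for 100 ms to imitate waiting on a database or network, and real servers often use other concurrency models.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def handle_user(user_id):
    # Hypothetical request handler: ~100 ms of simulated I/O wait.
    time.sleep(0.1)
    return f"response for user {user_id}"

def serve_concurrently(user_ids, max_workers):
    """Handle all users with up to `max_workers` requests in flight at once."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(handle_user, user_ids))

start = time.perf_counter()
responses = serve_concurrently(range(10), max_workers=10)
elapsed = time.perf_counter() - start

# Handled one after another, 10 users would need at least 1 second in total.
print(f"served {len(responses)} users in {elapsed:.2f} s")
```

Lowering `max_workers` shows how the number of concurrent users you can serve depends on the resources you give the system.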

You can read more about concurrency here - Link

5. Scalability

Remember the IRCTC Tatkal booking system? You have probably tried to book a Tatkal ticket at least once, and you may even have succeeded in booking a slot. Here I want to talk about how that system works. It is designed well enough to handle the sudden increase in server load during the first few minutes of the booking window. The server does crash sometimes, but most of the time you just see a loader on the frontend (increased latency) while the server copes with the sudden spike.

So, scalability is the measure of how well the system can respond to incoming requests by adding or removing resources to meet demand without sacrificing latency. Scaling means your cloud infrastructure should be powerful enough to handle the increased traffic load.

There are two types of scalability

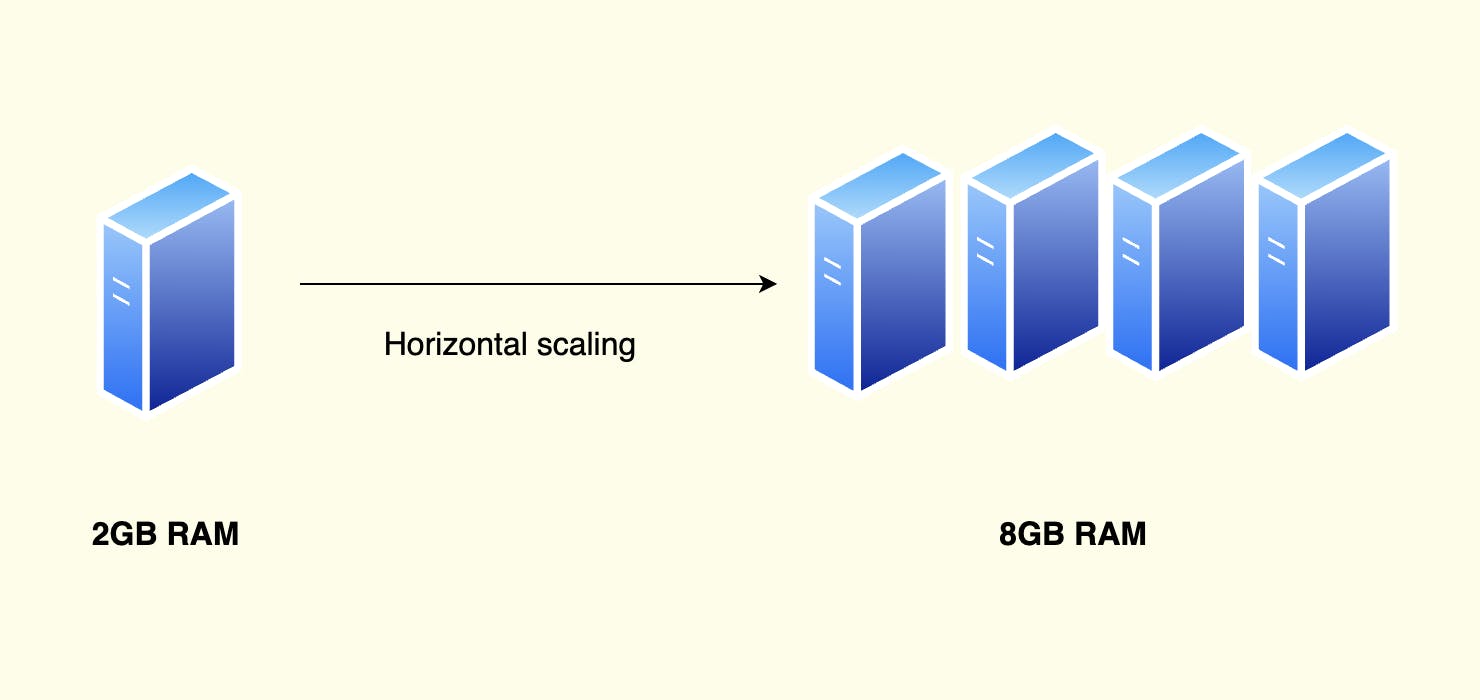

- Horizontal Scaling (adding more machines in parallel)

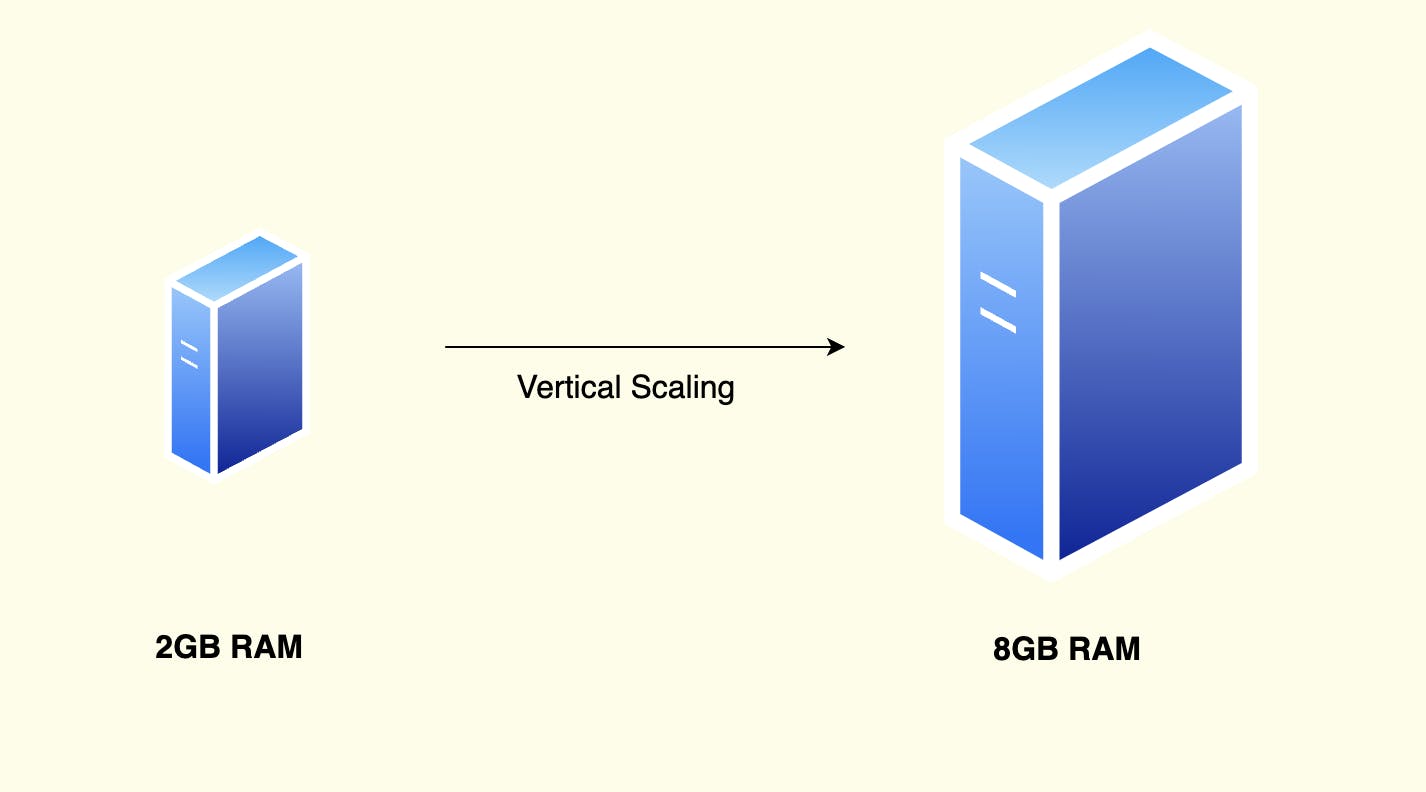

- Vertical Scaling (adding more power, such as CPU or RAM, to a single machine)
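To get a feel for the difference, here is a back-of-the-envelope sketch. The function names, the peak load, and the machine capacities are all hypothetical numbers chosen for this example; real capacity planning needs load testing.

```python
import math

def horizontal_plan(peak_rps, rps_per_machine):
    """Horizontal scaling: how many identical machines cover the peak load."""
    return math.ceil(peak_rps / rps_per_machine)

def vertical_plan(peak_rps, machine_sizes_rps):
    """Vertical scaling: smallest single machine that covers the peak load.

    Returns None when even the largest available machine is too small.
    """
    candidates = [size for size in sorted(machine_sizes_rps) if size >= peak_rps]
    return candidates[0] if candidates else None

# Hypothetical peak of 10,000 requests/sec.
print(horizontal_plan(10_000, 1_500))                 # 7 machines
print(vertical_plan(10_000, [2_000, 8_000, 16_000]))  # 16000

# Vertical scaling stops working once no single machine is big enough.
print(vertical_plan(50_000, [2_000, 8_000, 16_000]))  # None
```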

We will learn more about horizontal and vertical scaling in the next blog. Stay tuned!